Social networks are failing to tackle coronavirus-related anti-vaccination posts containing "clearly harmful information" even after the material is brought to their attention, according to a campaign group.

It flagged more than 900 examples to Facebook, Instagram, Twitter and YouTube via a team of volunteers.

It said the firms did not remove or otherwise deal with 95% of the cases.

The four platforms each have policies designed to restrict such content.

The Center for Countering Digital Hate (CCDH) said UK lawmakers should accelerate existing plans to hold the companies to account as a consequence.

The US firms were shown a copy of the report ahead of its publication.

It contains selected examples. But CCDH has yet to disclose the full list of links to the posts involved, although it has pledged to do so on request now that the report is out.

This has limited the US companies' ability to address the specific cases. But the firms have said they have removed and labelled millions of other items since the virus was declared a public health emergency.

Vaccination rules

None of the tech firms involved forbid users from posting inaccurate information about vaccinations.

However, after a series of measles outbreaks in 2019, Facebook - which owns Instagram - said it would start directing users to reliable information from the World Health Organization (WHO) when they searched or visited relevant content.

Twitter and YouTube also took measures the same year to steer users away from related conspiracy theories.

However, the platforms tightened their rules after the outbreak of Covid-19.

Facebook said it would remove posts that could lead to physical harm, and would apply warning labels to other relevant posts debunked by fact-checkers.

Twitter said it would remove coronavirus-related posts that could cause widespread panic and/or social unrest, and add warning messages to other disputed or misleading information about the pandemic.

And YouTube said it had banned content about Covid-19 that posed a "serious risk of egregious harm" or contradicted medical information given by the WHO and local health authorities.

Conspiracy theories

CCDH said a total of 912 items posted by anti-vaccine protesters that it had judged to have fallen foul of the companies' Covid-19 rules were flagged to the firms between July and August.

Of these:

- Facebook received 569 complaints. It removed 14 posts, added warnings to 19 but did not suspend any accounts - representing action on 5.8% of the cases

- Instagram received 144 complaints. It removed three posts, suspended one account and added warnings to two posts - representing action on 4.2% of the cases

- Twitter received 137 complaints. It removed four posts, suspended two accounts, and did not add any warnings - representing action on 4.4% of the cases

- YouTube received 41 complaints. It did not act on any of them
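
The percentages quoted above can be checked with some simple arithmetic. The following is a minimal sketch rather than CCDH's own method: it assumes each removal, suspension or warning corresponds to a separate complaint, and the names `reports` and `action_rate` are illustrative only.

```python
# Figures as quoted in the list above, per platform:
# (complaints, posts removed, accounts suspended, warnings added)
reports = {
    "Facebook": (569, 14, 0, 19),
    "Instagram": (144, 3, 1, 2),
    "Twitter": (137, 4, 2, 0),
    "YouTube": (41, 0, 0, 0),
}


def action_rate(complaints, removed, suspended, warned):
    """Percentage of complaints that led to any action.

    Assumption: every action counts against a distinct complaint.
    """
    return 100 * (removed + suspended + warned) / complaints


# Round to one decimal place, matching the precision used in the list.
rates = {name: round(action_rate(*row), 1) for name, row in reports.items()}

# Totals across the four platforms, taken from the itemised figures only.
total_complaints = sum(row[0] for row in reports.values())
total_actions = sum(sum(row[1:]) for row in reports.values())

for name, rate in rates.items():
    print(f"{name}: {rate}%")
print(f"Itemised complaints: {total_complaints}, actions: {total_actions}")
```

On these assumptions the per-platform rates match those quoted. The itemised complaints add up to 891 rather than the 912 total cited by CCDH, a gap the figures above do not explain. Measured against either total, the 45 actions taken amount to roughly 5% of cases, in line with the 95% that CCDH said were not dealt with.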

Among the examples of material that CCDH said was not tackled were:

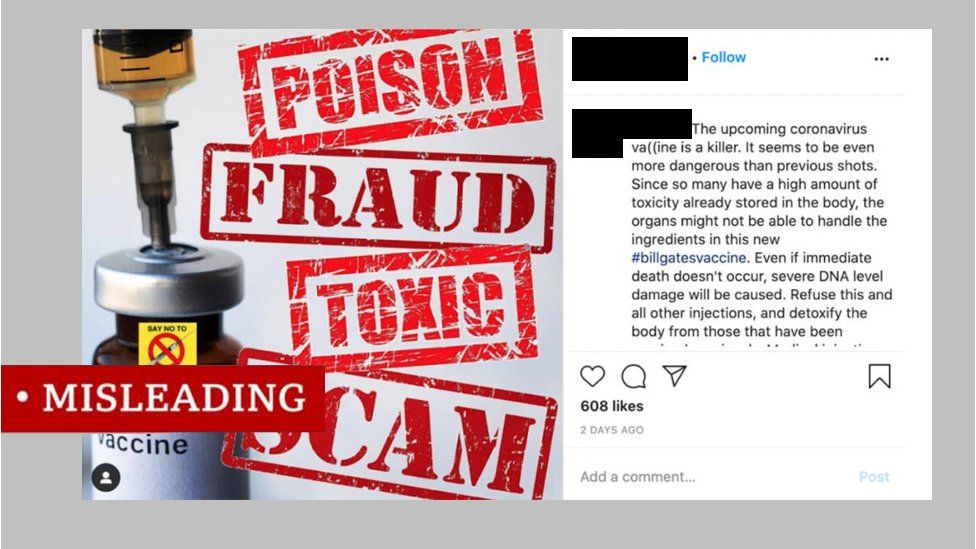

- an Instagram post saying the "upcoming coronavirus vaccine is a killer" that would cause "DNA-level damage"

- a Facebook post claiming the only way to catch a virus was to be "injected with one via a vaccine"

- a tweet saying that vaccines cause people to become genetically modified and thus no longer the way God intended

- a YouTube clip in which an interviewee claims that Covid is part of a "depopulation agenda" and that vaccines cause cancer

CCDH added that about one in 10 of all examples made reference to conspiracy theories involving Microsoft co-founder Bill Gates, including suggestions that he wanted people to be fitted with microchips that would cause them to starve if they refused a vaccine.

'Aggressive steps'

Facebook responded by saying it had taken "aggressive steps to limit the spread of misinformation" about Covid-19, including the removal of more than seven million items and the addition of warning labels to a further 98 million pieces of misinformation.

Twitter said that while it did not take action on every tweet containing disputed information about the virus, it did prioritise those that had a call to action that could cause harm.

"Our automated systems have challenged millions of accounts which were targeting discussions around Covid-19 with spammy or manipulative behaviours," it added.

And Google, which owns YouTube, said that it had taken a number of steps to "combat harmful misinformation", including banning some clips and displaying fact-checking panels alongside others.

But CCDH's chief executive said politicians and regulators in the US and UK must now force the firms into tougher action.

"This is an immediate crisis, with a ticking time bomb about to go off in our societies," said Imran Ahmed.

"Social media companies... do not listen to polite requests for change. Given the acute nature of the coronavirus crisis, their failure to act must now be met with real consequences."

London-based CCDH is funded by the Pears Foundation, Joseph Rowntree Charitable Trust and Barrow Cadbury Trust, as well as others the group says do not want to be named in case they are targeted as a result.

Public health experts warn that coronavirus vaccine conspiracy theories spread quickly online and pose a grave threat to all of us.

If a significant number of people decide not to take a safe and approved vaccine, our ability to suppress the disease will be limited.

It's legitimate to have concerns that a vaccine is safe and properly tested. It's also legitimate to want to discuss this in private chats or online.

But claims that a coronavirus vaccine will be a tool for mass surveillance or genocide only do harm.

The theories are often spread by popular pseudo-science figures and large Facebook pages notorious for promoting disinformation.

They then drip into the average person's feeds and are sometimes amplified by celebrities, sowing seeds of doubt.

The social media firms say they are taking action, but critics say that if they don't do more - whether voluntarily or under compulsion - they could contribute to a public health disaster.